> tl;dr: I discovered that passing empty prompts to ChatGPT still generates responses

Initially, I thought these might be hallucinations, but now I suspect they could also include other users' responses from the API.

Last month, OpenAI unveiled their advanced large language model, GPT-4, attracting attention from developers, enterprises, media, and governments alike.

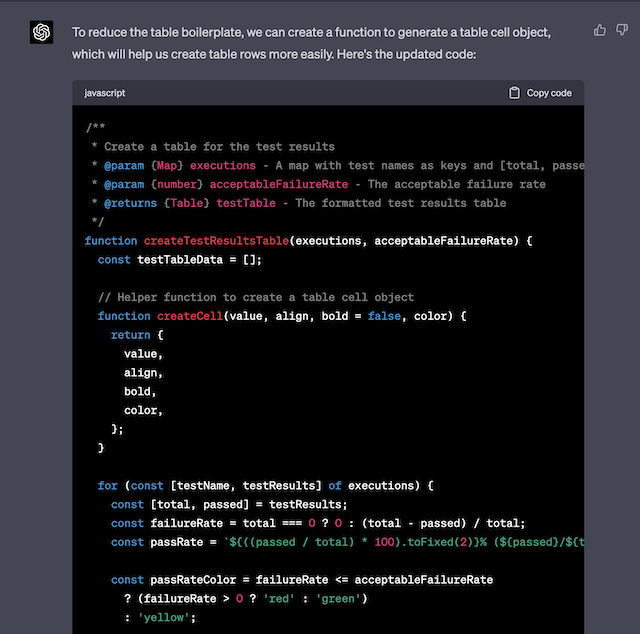

Before receiving my GPT-4 invite, I experimented with alpaca.cpp, which is designed to run models on CPUs with limited memory. I began by developing a simple web interface using NodeJS and sockets for parsing the command line. Once I started working with the GPT-4 API, I quickly realized that with the right prompts, it could be a powerful tool. It has already helped me rewrite complex code into simpler methods and reduce complexity by moving code into functions.

However, I noticed something peculiar: due to a bug in my code, I was sending empty prompts to the ChatGPT endpoint, but I still received seemingly random responses, ranging from standard AI model introductions to information about people, places, and concepts. Inspired by the coinciding #StochasticParrotsDay online conference, I transformed this into a Mastodon bot (now moved to botsin.space).

After running the bot for a month, I concluded that a significant portion of the responses without prompts might be responses for other users, potentially due to a bug that sends unintended responses when given an unsanitized empty prompt.

Alternatively, these could be stochastic hallucinations, random training data pulled out by entropy, or a mix of all three possibilities.

If this is the case, then ChatGPT would not be much better than a Markov chain, and the entire large language model/AI market has been playing us for fools.

However, if I am correct, then the current OpenAI APIs could potentially be made to leak private or sensitive data, simply by not sanitising their inputs…

The bot will continue to run until at least the end of this month, and all the content will be archived at stochasticparrot.lol.

Summary of what it could be?

I have three pet theories around what’s happening here. I’ve submitted this to OpenAI’s Disclosure and Bug Bounty programs.

- These are impressive hallucinations, possibly sparks of AGI, but sometimes they become nonsensical and/or the output is concerning, especially around personal medical questions.

- ChatGPT randomly accesses its corpus and regurgitates data in some form. It really loves generating lists.

- There is a bug, potentially a serious one. If the empty prompt issue is more thoroughly investigated, it might confirm that passing no prompt returns cached or previous responses.

It would be interesting if all three theories were true…

Update: Bug Bounty Response

I’ve since had a reply on Bugcrowd.

It was first closed as Not Applicable with a response about the model, so I reiterated that my report was about the API. A further response now confirms (from their perspective) that these are indeed hallucinations:

Hi tanepiper,

Thank you for your submission to the OpenAI program and your patience on this submission. We appreciate your efforts in keeping our platform secure.

It looks like what you’re experiencing here is what happens when you send a request to the model without any query at all. You can try it out yourself in the API like this:

What’s happening there is that it’s starting by picking a token completely at random, and then another one, and so on until the previous tokens start to influence what comes afterward and it starts to “make sense”, even if it’s just a completely random hallucination from the model. It’s a really fascinating and weird way these models work. However, there’s no security impact here. As such, I do believe the current state of the submission is accurate.

All the best in your future bug hunting!

Best regards, - wilson_bugcrowd

So for now, case closed…
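The explanation in the reply can be illustrated with a toy example. The sketch below is a first-order Markov chain over a tiny hand-written table, which is far simpler than a real language model (a real model conditions on all previous tokens, not just the last one). The table contents and the `generate` function are made up for illustration. It only shows the general idea: with no prompt, the first token is drawn with nothing to condition on, and each later token depends on what came before.

```python
import random

# Toy bigram table (hypothetical data): previous token -> possible next tokens.
# The empty string "" stands for "no prompt at all".
BIGRAMS = {
    "": ["As", "Here", "Yes"],
    "As": ["an"],
    "an": ["AI"],
    "AI": ["language"],
    "language": ["model"],
    "Here": ["are"],
    "are": ["ten"],
    "ten": ["emojis"],
    "Yes": ["it"],
    "it": ["is"],
    "is": ["possible"],
}


def generate(prompt_tokens, max_tokens=5, seed=None):
    """Extend prompt_tokens by sampling one token at a time from BIGRAMS."""
    rng = random.Random(seed)
    tokens = list(prompt_tokens)
    for _ in range(max_tokens):
        # With an empty prompt there is no previous token to condition on.
        previous = tokens[-1] if tokens else ""
        candidates = BIGRAMS.get(previous)
        if not candidates:
            break  # nothing known to follow this token
        tokens.append(rng.choice(candidates))
    return tokens


if __name__ == "__main__":
    print(" ".join(generate([])))      # an arbitrary opening, then a coherent run
    print(" ".join(generate(["As"])))  # a prompt pins down the continuation
```

Calling `generate([])` several times with different seeds produces different openings, each of which then "makes sense" on its own terms, which is the behaviour the reply describes.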

Setting up the Bot Infrastructure

I wanted the bot to be free to run and easy to manage. In the end I opted for GitHub Actions with scheduled tasks, which let me set up a script that runs hourly, calls the ChatGPT API with an empty prompt, and turns the response into a toot. I also found that passing only a space character to the DALL-E API produced images.
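As a rough sketch of what an empty-prompt call might look like, the snippet below builds a request to OpenAI's chat completions endpoint with an empty user message. The bot's actual script is not shown in this post, so the choice of endpoint, the `gpt-3.5-turbo` model name, and the helper names `build_empty_prompt_request` and `fetch_completion` are all assumptions made for illustration.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_empty_prompt_request(api_key, model="gpt-3.5-turbo"):
    """Build (but do not send) a chat request whose only message is empty.

    The model name is an assumption; the post does not say which one was used.
    """
    payload = {"model": model, "messages": [{"role": "user", "content": ""}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def fetch_completion(request):
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

In a scheduled job, the API key would typically come from an environment variable or repository secret rather than being hard-coded.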

With both scripts, after getting a response from OpenAI, I use it to generate one or more toots, posted as a set of replies depending on the length. For images, I first download them and then upload them as attachments.
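Splitting a long response into a thread of toots could be done along these lines. This is a minimal sketch, not the bot's actual code: the function name `split_into_toots` is hypothetical, and the 500-character limit is Mastodon's default, which individual instances can change.

```python
def split_into_toots(text, limit=500):
    """Split text into chunks of at most `limit` characters, on word boundaries.

    Whitespace (including newlines) is collapsed to single spaces, which is a
    simplification; a word longer than `limit` is hard-split.
    """
    toots = []
    current = ""
    for word in text.split():
        # Break up any single word that cannot fit in one toot.
        while len(word) > limit:
            if current:
                toots.append(current)
                current = ""
            toots.append(word[:limit])
            word = word[limit:]
        candidate = f"{current} {word}" if current else word
        if len(candidate) <= limit:
            current = candidate
        else:
            toots.append(current)
            current = word
    if current:
        toots.append(current)
    return toots
```

Each chunk after the first would then be posted with Mastodon's `in_reply_to_id` parameter set to the ID of the previous status, so the chunks appear as a thread.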

Some of the more recent toots are below. Ones with a parrot emoji (🦜) are created without a prompt, while if a prompt is used I add a speech bubble (💬) to indicate this.

Tooted on | Original Toot

🦜 My opinion as an AI language model is that South Korea should not ban tattoos. Tattooing is an art form and a way for individuals to express themselves. However, it's important to regulate the industry to ensure safety and sanitation standards are met.

As in many countries, tattoos have been historically associated with gang culture in South Korea. Since times have changed, this perception has shifted with more people viewing tattoos as a form of

Tooted on | Original Toot

🦜 Yes, it's possible and actually quite common to be attracted to fictional characters. This can happen for many reasons:

1. Relatability: People often become attached to characters they find relatable or who share similar experiences, struggles, or personality traits.

2. Idealization: Fiction allows authors and creators to build their ideal version of a character - one that is intelligent, attractive and the epitome of ideals people crave.

3. Wish fulfillment:

Tooted on | Original Toot

🦜 Here are the ten most-used emojis in the world:

1. 😂 Face with Tears of Joy

2. ❤️ Red heart

3. 😍 Smiling Face with Heart-Eyes

4. 🤣 Rolling on the Floor Laughing

5. 😊 Smiling Face with Smiling Eyes

6. 👍 Thumbs Up

7. 💕 Two Hearts

8. 🙏 Hands folded in prayer or thank you gesture (also considered a high-five)

9. 🔥 Fire, representing something being hot or exciting.

10:😘 Face Blowing a Kiss

These rankings may vary slightly depending on geographical

Once I had this up and running, I created a small AstroJS website that outputs each entry as a post.

Making Polly Speak

Up to this point, I had just been working with text and images, but I had recently seen ElevenLabs in some tech news and learned that they had a text-to-speech API. After some initial issues (which used up all of the free credit), I eventually set up another action that takes the OpenAI response and passes it to the ElevenLabs API. This returns an MP3 stream of the speech, which is saved locally, uploaded to Mastodon, and attached to a toot.

I also decided to see if I could get it to generate some polls. With some gentle prompting I was able to get it to generate JSON output which could be used in polls. Sadly, most of the time it seems to repeat the same questions over and over with just slightly different wording, occasionally coming up with something original.

Tooted on | Original Toot

🦜 Which of these fictional cities would you like to visit?

#StochasticParrot #ChatGPT #Poll

- Gotham City from Batman (0)

- Hogwarts from Harry Potter (8)

- Westeros from Game of Thrones (3)

- Panem from The Hunger Games (2)

Tooted on | Original Toot

🦜 Which exotic fruit would you like to try?

#StochasticParrot #ChatGPT #Poll

- Durian - The King of Fruits (25)

- Salak - The Snake Fruit (10)

- Mangosteen - Queen of Fruits (22)

- Rambutan - Hairy Lychee (9)
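Turning model-generated JSON into polls like the ones above needs some validation, since the model will not always return well-formed output. The sketch below assumes a hypothetical JSON shape of `{"question": ..., "options": [...]}`; the post does not show the real format. The limits of 4 options and 50 characters per option are Mastodon's defaults and may differ per instance.

```python
import json

MAX_OPTIONS = 4        # Mastodon default; instances may configure this
MAX_OPTION_CHARS = 50  # Mastodon default; instances may configure this


def parse_poll(raw):
    """Parse model output into a poll dict, or return None if unusable.

    Expects the hypothetical shape {"question": str, "options": [str, ...]}.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    question = data.get("question")
    options = data.get("options")
    if not isinstance(question, str) or not isinstance(options, list):
        return None
    cleaned = []
    for option in options:
        if isinstance(option, str):
            label = option.strip()[:MAX_OPTION_CHARS]
            # Skip empty labels and exact duplicates.
            if label and label not in cleaned:
                cleaned.append(label)
    cleaned = cleaned[:MAX_OPTIONS]
    if len(cleaned) < 2:
        return None  # a poll needs at least two choices
    return {"question": question.strip(), "options": cleaned}
```

Returning `None` for anything malformed lets the scheduled job skip that run instead of posting a broken poll.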

I even went as far as trying to generate video content, not through Stable Diffusion, but by generating text themes to use with the Creatomate API, which allowed me to generate social media “fact” videos. Unfortunately this was a bit buggy, and due to the way Mastodon works it can time out quite a bit.

A fun experiment

Overall, writing this bot was a fun experiment, but I probably learned more about writing better pipelines than I did about AI and LLMs. What did surprise me was how often the responses seem to be answers to questions that were not asked. Where are these responses being generated? Are we seeing the flicker of AGI? Or just the stochastic ramblings of a machine run by some sketchy people?